What Is a Large Language Model?

A large language model (LLM) is a type of artificial intelligence (AI) model designed to understand and generate human language. It uses neural networks (computing systems loosely inspired by the human brain) to process large amounts of text and learn the patterns of language.

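Large language models are trained on massive text datasets and work by predicting the next word (more precisely, the next token, which can be a whole word or part of one) in a sequence. Repeating this prediction one token at a time lets them produce coherent responses.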
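To make “predicting the next word” concrete, here’s a toy sketch in Python. It is a word-level bigram counter, not a neural network: it counts which word follows which in a tiny, hypothetical corpus and then repeatedly appends the most frequent follower. Real LLMs predict tokens with a neural network trained on vastly more text, but the generation loop (predict, append, repeat) has the same shape.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 5) -> list[str]:
    """Greedily append the most likely next word, one step at a time."""
    output = [start]
    for _ in range(max_words):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # no known continuation for this word
        next_word, _count = candidates.most_common(1)[0]
        output.append(next_word)
    return output


# Hypothetical toy corpus, for illustration only.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", max_words=4)))
```

Greedy selection always picks the single most likely word; production chatbots usually sample from the predicted probabilities instead, which is why the same prompt can yield different answers.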

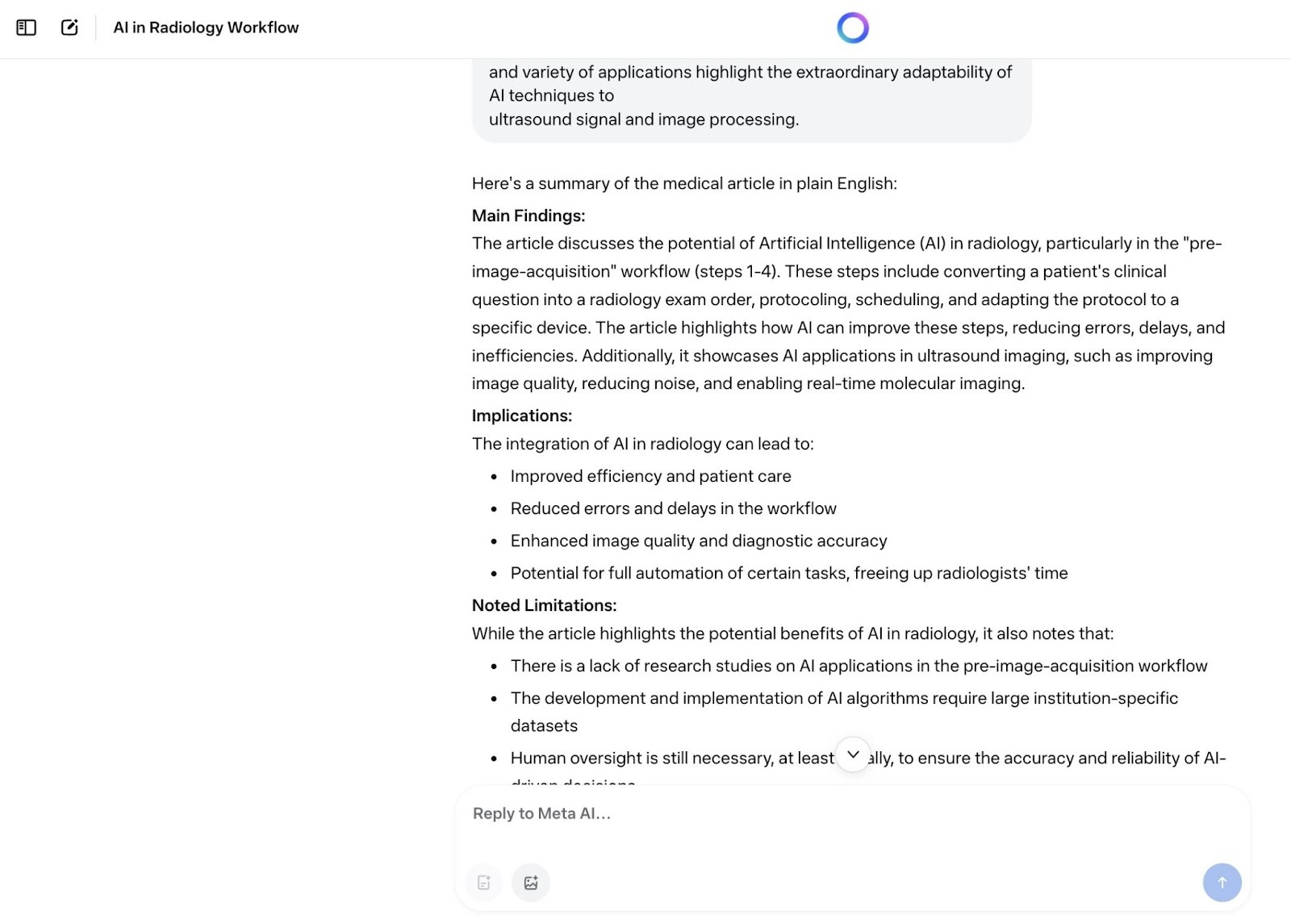

Tools built on LLMs can perform a variety of tasks without task-specific training. For example, they can translate or summarize text, answer questions, or help with coding.

How Do People Use Large Language Models?

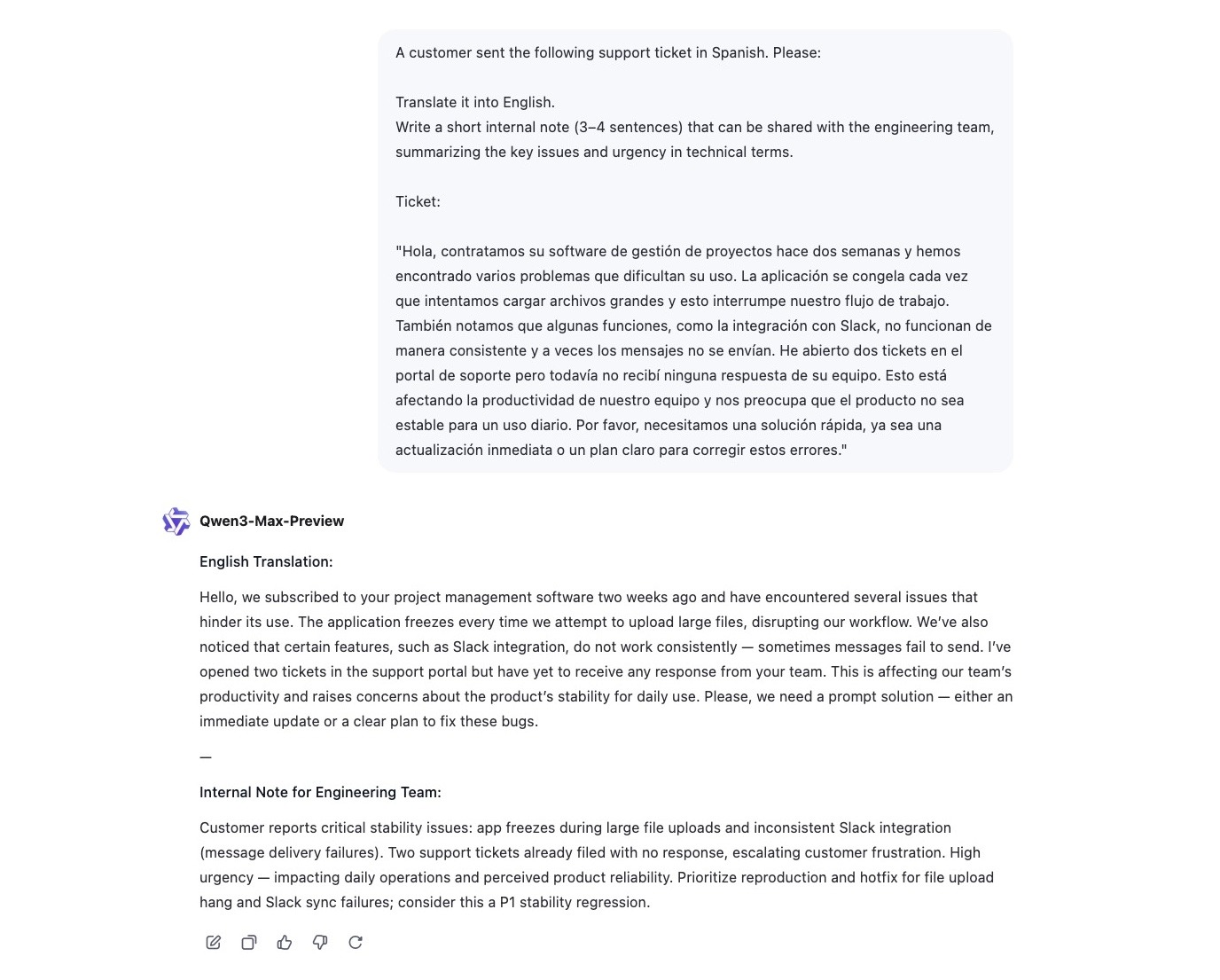

We surveyed 200 consumers to find out how they’re using LLMs. Here’s what we found: just under 60% of respondents use LLM-powered AI tools daily.

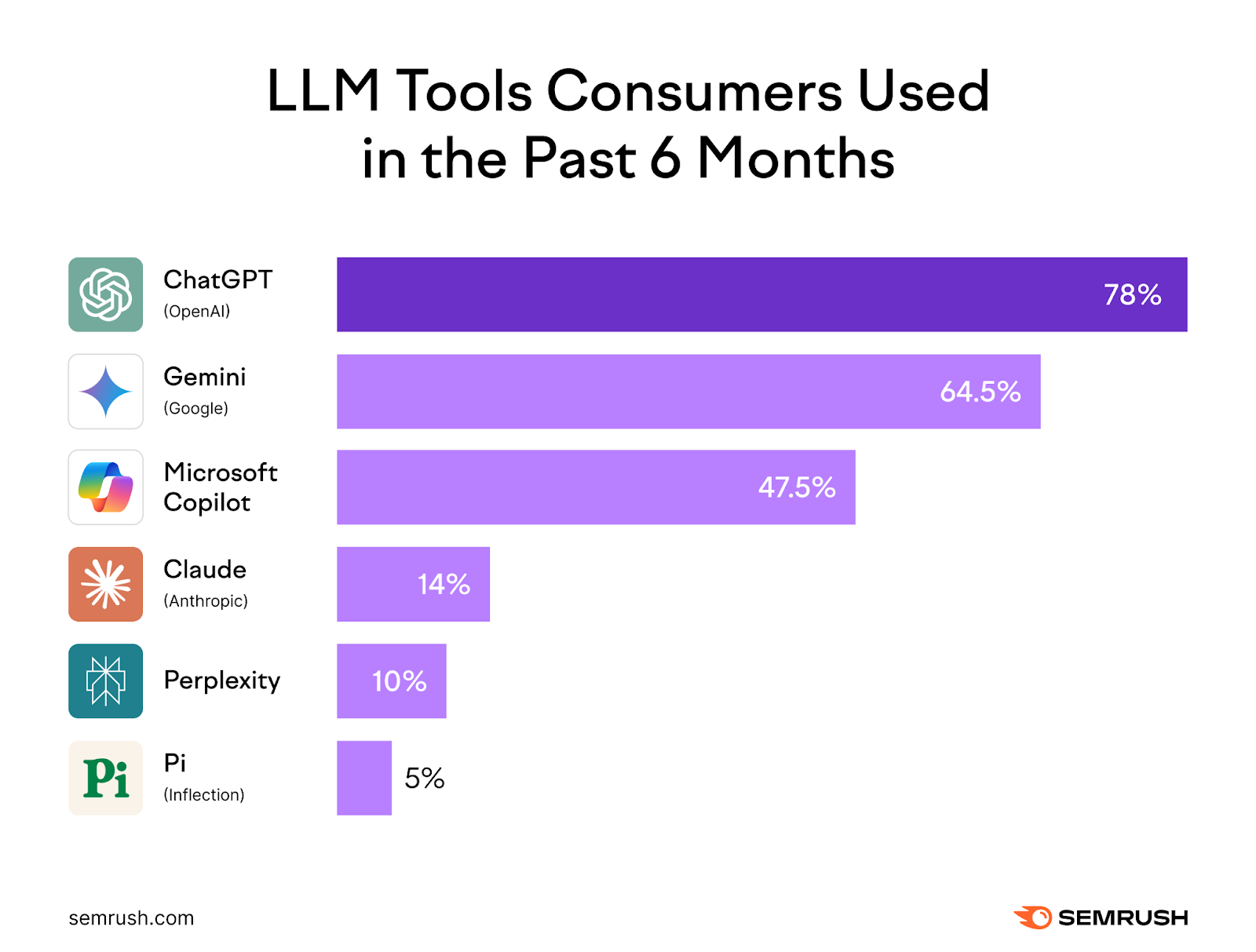

Among respondents who use LLM tools, the most popular are ChatGPT (78%), Gemini (64%), and Microsoft Copilot (47%).

Research and summarization was the most common use case among respondents, with 56% of consumers saying they use LLMs or LLM tools for these tasks.

Other popular use cases include:

- Creative writing and ideation (45%)

- Entertainment and casual questions (42%)

- Productivity-related tasks such as drafting emails and notes (40%)

When it comes to choosing an LLM or tool, the qualities people value the most include accuracy, speed/latency, and the ability to handle long prompts.

Almost half of our respondents (48%) say they pay for LLMs or LLM-powered tools, either personally or through their employers. In most cases, this means they’re paying for tools like ChatGPT or Copilot, which are built on top of LLMs.

Top 8 Large Language Models

Here’s a quick overview of the most popular large language models:

| Model | Developer | Release Date | Max Context Window (tokens) | Best For |
| --- | --- | --- | --- | --- |
| GPT-5 | OpenAI | Aug 2025 | 400K | General performance |
| Claude Sonnet 4 | Anthropic | May 2025 | 1M | Long-context tasks |
| Gemini 2.5 | Google DeepMind | Mar 2025 | 1M | Large-scale, multimodal analysis |
| Mistral Large 2.1 | Mistral AI | Nov 2024 | 128K | Open-weight commercial use |
| Grok 4 | xAI | Jul 2025 | 256K | Real-time web context |
| Command R+ | Cohere | Apr 2024 | 128K | Fact-based retrieval tasks |
| Llama 4 | Meta AI | Apr 2025 | 10M (Scout) | Open-source customization |
| Qwen3 | Alibaba Cloud | Apr 2025 | 128K | Multilingual enterprise tasks |

Note that you’ll typically get the maximum context window only through a model’s API. Context windows in consumer apps and chatbots are generally smaller.

Let’s look at each one in more detail in our list of large language models below.

1. GPT-5

Developer: OpenAI

Released: August 2025

Context window: 400,000 tokens

Best for: General performance

GPT-5 is the model behind ChatGPT, which is considered by many to be the gold standard for general-purpose AI thanks to its ability to handle a variety of input types (including text, images, and audio) within the same conversation.

This lines up with our survey findings: 78% of respondents say they’ve used ChatGPT in the past six months.

It performs consistently well across a wide range of tasks, from creative writing to technical problem-solving.

GPT-5 also powers Microsoft Copilot and various other third-party tools. These integrations make GPT-5 one of the most widely used LLMs.

Strengths

- Highly versatile across a variety of use cases

- Strong reasoning abilities and high accuracy

- Suitable for complex workflows thanks to multimodal input (text, audio, images) and output capabilities

- Large integration ecosystem (ChatGPT, Copilot, third-party apps)

Drawbacks

- Less customizable compared to open-source models

- More expensive than open-weight models

Further reading: GPT-5 Rolls Out: What the New Model Means for Marketers

2. Claude Sonnet 4

Developer: Anthropic

Released: May 2025

Context window: 1 million tokens

Best for: Long-context tasks

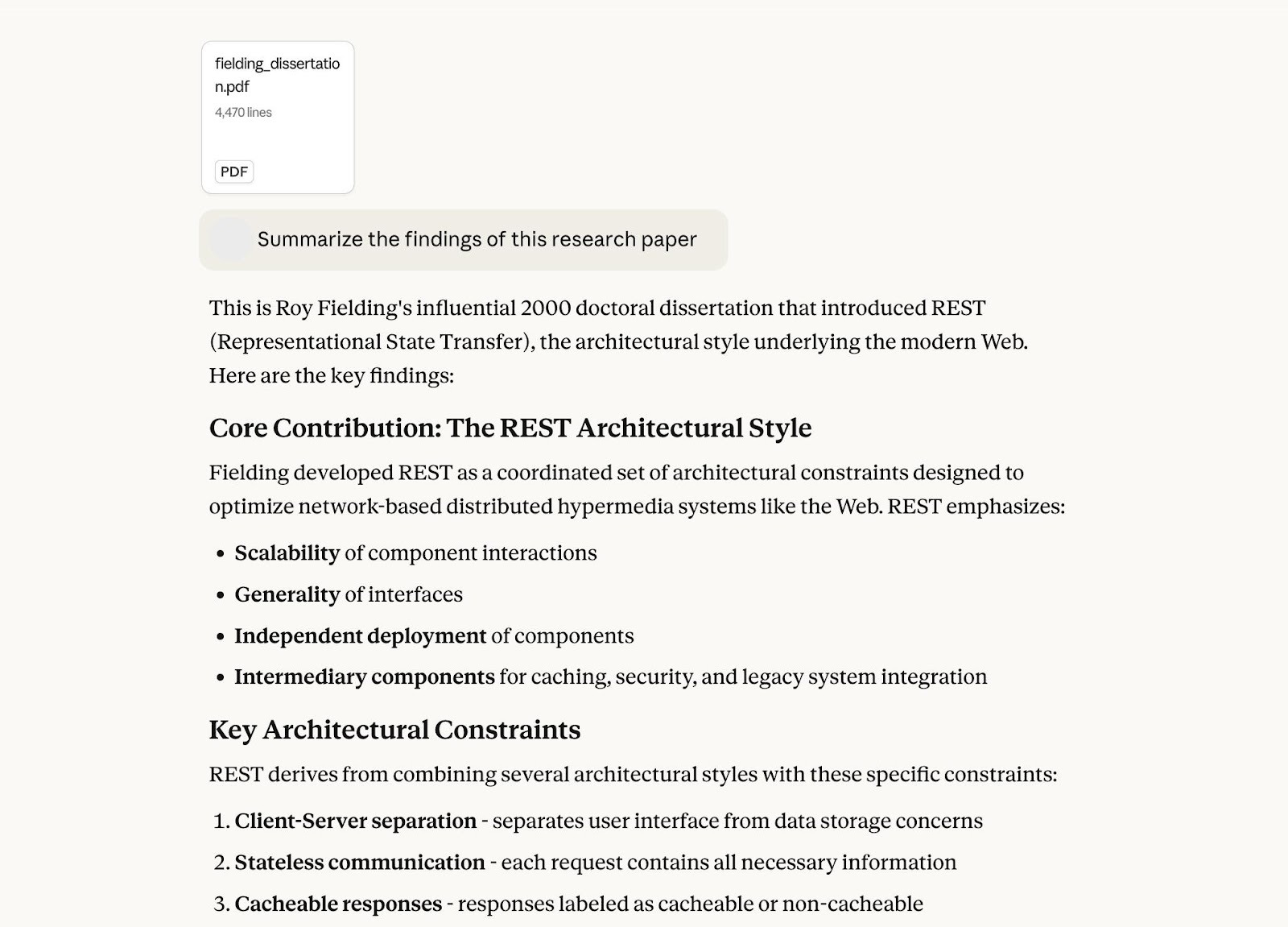

Claude Sonnet 4 is one of Anthropic’s main models, known for its ability to handle long and complex inputs. Its context window of 1 million tokens allows it to analyze large reports, codebases, or entire books in one go.

(Claude Opus 4 is a more powerful model for some tasks, but it has a smaller context window of 200K tokens.)

Claude Sonnet 4 is trained using Anthropic’s “constitutional AI” framework, which emphasizes honesty and safety. This makes Claude particularly useful in sensitive industries such as healthcare and law.

Strengths

- Huge context window (1M tokens)

- Constitutional AI framework makes it safer by design

- Trustworthy model for regulated industries

Drawbacks

- May sometimes refuse to handle borderline or grey-area queries that other models attempt to solve (e.g., asking Claude to write a highly critical piece on a competitor)

- Slower response times compared to lighter-weight models

- Limited customization due to being a proprietary (closed source) model

3. Gemini 2.5

Developer: Google DeepMind

Released: March 2025

Context window: 1 million tokens

Best for: Large-scale document analysis

Gemini 2.5 is Google DeepMind’s LLM, which is designed to process different types of input (text, images, code, audio, and video) in the same prompt. This makes it a highly versatile LLM suitable for complex, cross-format tasks.

Gemini 2.5 can handle large workflows, such as analyzing or searching through long document archives or large codebases in a single session.

Gemini 2.5 is also available directly in Google Workspace, so you can use it in tools like Docs, Sheets, and Gmail.

Strengths

- Excels at handling multimodal inputs consisting of text, images, code, video, and audio

- 1M context window makes it suitable for large-scale analysis

- Google Workspace integration makes it easy to use in everyday workflows

Drawbacks

- Limited customization due to being a closed-source model

- Less flexible for users whose workflows rely heavily on non-Google tools

4. Mistral Large 2.1

Developer: Mistral AI

Released: November 2024

Context window: 128,000 tokens

Best for: Open-weight commercial use

Mistral Large 2.1 is a commercial open-weight model, meaning it’s available for businesses to run using their own infrastructure. This makes it a great choice for organizations that require more control over their data.

Strengths

- Provides more control over customization and data security due to its open-weight and transparent nature

- Offers flexible deployment through self-hosting or cloud APIs

- Cost-efficient for high-volume use cases and enterprise-scale applications

Drawbacks

- Smaller context window compared to models like Claude and Gemini

- Requires more technical setup and infrastructure

5. Grok 4

Developer: xAI

Released: July 2025

Context window: 128,000 tokens (in-app), 256,000 tokens through the API

Best for: Real-time web context

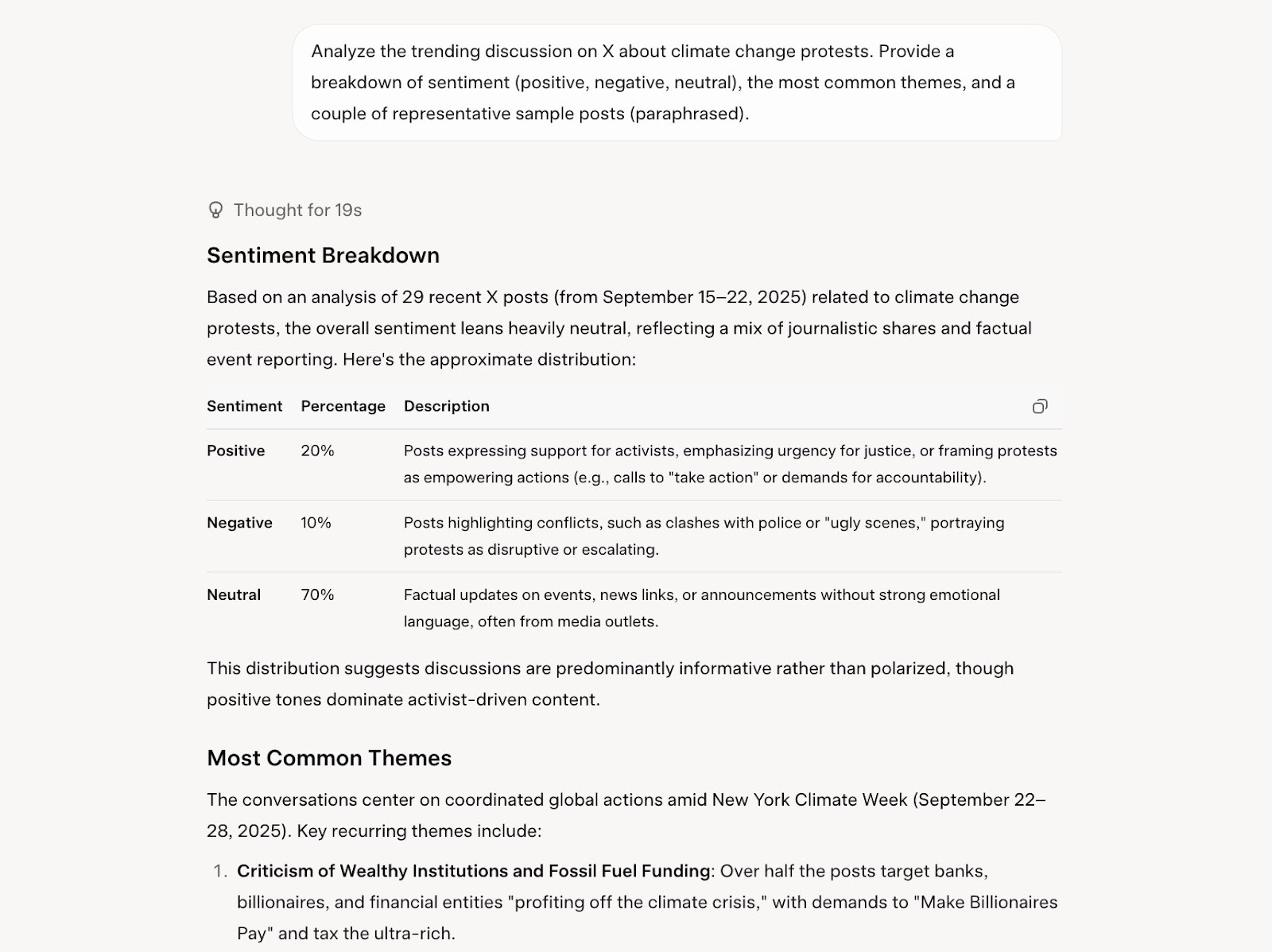

Grok 4 is an LLM that’s marketed as an AI assistant and is integrated natively into the X social platform (formerly Twitter).

This integration gives it access to live social data, including trending posts, which makes Grok especially useful for users looking to stay on top of news, monitor and analyze online sentiment, or identify emerging trends.

Strengths

- Real-time access to social media data

- Relatively large context window (256,000 tokens through the API)

- Native integration with X

Drawbacks

- Limited usefulness outside of the X ecosystem

- Lack of customization options due to its proprietary nature

6. Command R+

Developer: Cohere

Released: April 2024

Context window: 128,000 tokens

Best for: Retrieval-augmented generation

Command R+ is a large language model that’s designed to pull information from external sources (like APIs, databases, or knowledge bases) while answering a prompt.

Since Command R+ doesn’t rely solely on its training data and can query other sources, it’s less likely to provide incorrect or made-up answers (known as hallucinations).
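To illustrate the retrieval idea behind Command R+, here’s a minimal, provider-agnostic sketch of the “retrieve, then prompt” pattern. It is a toy built on assumptions: the document list is hypothetical, relevance is scored by simple word overlap (production systems typically use embeddings or a search index), and no real model or Cohere API is called. It only shows how retrieved passages are placed into the prompt so the model can ground and cite its answer.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents that share the most words with the question."""
    q_words = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Put numbered sources in front of the question so the model can cite them."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"{context}\n\nQuestion: {question}"
    )


# Hypothetical knowledge base, for illustration only.
docs = [
    "Our refund window is 30 days from the delivery date.",
    "Support is available Monday to Friday, 9am to 5pm.",
    "Shipping to Canada takes 5 to 7 business days.",
]
question = "How many days is the refund window?"
top = retrieve(question, docs, k=1)
print(build_prompt(question, top))
```

The resulting prompt would then be sent to the model; because the answer is expected to come from the numbered sources, it is easier to check than an answer drawn purely from training data.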

Command R+ also supports more than 10 major languages (including English, Chinese, French, and German). This makes it a strong choice for global businesses that manage multilingual data.

Strengths

- Source-backed answers and reduced hallucinations

- Multilingual support across 10+ major languages

- Transparency and reliability for fact-based queries

Drawbacks

- Needs integration with external data sources to realize its full potential

- Has a smaller ecosystem compared to models like GPT-5

- Less suited for creative tasks

7. Llama 4

Developer: Meta AI

Released: April 2025

Context window: 10 million tokens (Llama 4 Scout; Llama 4 Maverick supports 1 million)

Best for: Open-source customization

Llama 4 is an open-source model from Meta that anyone can download and use without having to pay licensing fees.

Llama 4 offers pre-trained and instruction-tuned weights (fine-tuned to follow instructions more reliably) for public use. This gives users the flexibility to either build on top of the base model or opt for a version that’s already optimized for everyday use cases.

Llama 4 supports both text and visual tasks across 8+ languages.

Strengths

- Open-source nature makes it free to use, integrate, and customize for your own AI agents

- 10M-token context window allows for very large inputs

- Strong community and rapid ecosystem growth

Drawbacks

- Technical expertise needed to fine-tune the model effectively

- Less polished than consumer-facing models like GPT-5

- Limited customer support

Llama 4 is a good choice for enterprises and developers that need a customizable and scalable model that they have full control over (e.g., for AI agent development or research-heavy use cases).

8. Qwen3

Developer: Alibaba Cloud

Released: April 2025

Context window: 128,000 tokens

Best for: Multilingual enterprise tasks

Qwen3 is a large language model from Alibaba that supports over 25 languages and is well-suited for companies that operate across multiple regions.

Qwen3 can handle long conversations, support tickets, and lengthy business documents without loss of context.

Strengths

- Strong multilingual support

- Enterprise-friendly design makes it suitable for use across large organizations

- Offers a good balance between performance and resource use thanks to its Mixture-of-Experts (MoE) variants, which route each input token to a small subset of specialized “expert” sub-networks instead of running the entire model

Drawbacks

- Relatively small context window compared to other leading models

- Less suitable for highly creative tasks
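The Mixture-of-Experts idea mentioned in Qwen3’s strengths can be sketched in a few lines. This is a toy, pure-Python illustration of top-k routing using made-up router weights and hypothetical “experts” (simple functions standing in for neural sub-networks); it is not Qwen3’s actual implementation. A router scores every expert for the input, only the highest-scoring experts run, and their outputs are blended with softmax weights.

```python
import math
from typing import Callable

Vector = list[float]


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(
    x: Vector,
    gate_weights: list[Vector],
    experts: list[Callable[[Vector], Vector]],
    top_k: int = 2,
) -> tuple[Vector, list[int]]:
    """Route input x to its top_k experts and blend their outputs."""
    # 1. The router scores every expert with a dot product against x.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # 2. Keep only the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # 3. A softmax over the chosen experts' scores gives their mixing weights.
    weights = softmax([scores[i] for i in chosen])
    # 4. Only the chosen experts run; the others are skipped entirely.
    output = [0.0] * len(x)
    for weight, i in zip(weights, chosen):
        y = experts[i](x)
        output = [o + weight * yi for o, yi in zip(output, y)]
    return output, chosen


# Hypothetical toy setup: 4 "experts" that are simple functions, plus a hand-picked router.
experts = [
    lambda v: [2 * a for a in v],
    lambda v: [a + 1 for a in v],
    lambda v: [-a for a in v],
    lambda v: [0.0 for _ in v],
]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, used = moe_layer([1.0, 2.0], gate_weights, experts, top_k=2)
print("experts used:", used, "output:", out)
```

Because only `top_k` experts run for each input, the compute per token stays much lower than running every expert, even though the model’s total parameter count is large.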

What to Look for When Comparing LLMs

Use these criteria to determine the right LLM for your needs:

Use Fit: Creative, Technical, or Conversational

Some models are better suited for certain use cases than others:

- GPT-5, Claude Sonnet 4, and Gemini 2.5 are great for creative tasks like writing or ideation

- Qwen3 and Grok 4 excel at coding and math-related tasks

- Mistral Large 2.1 and Command R+ are well suited for data-sensitive or fact-based business tasks, such as answering questions over internal documents

Opt for a model with strengths that best match your intended use case.

Cost, Licensing, and Deployment Options

The cost of using an LLM depends on token pricing, how you access it (through a cloud API or by self-hosting), and its licensing terms (e.g., proprietary or open-weight).

Costs can vary widely between different LLMs.

You can self-host open-weight models such as Llama 4 and Mistral Large 2.1. This often makes them more cost-effective. But it also means they require more setup and ongoing maintenance.

On the other hand, models like GPT-5 and Claude Sonnet 4 are often easier to use. But they can come with higher costs if you run a high volume of queries.

Here’s a quick overview of API token prices for each model (including two variants each of Claude and Llama) at the time of writing:

| Model | Input Cost (per 1M tokens) | Output Cost (per 1M tokens) |
| --- | --- | --- |
| GPT-5 | $1.25 | $10.00 |
| Claude Opus 4 | $15.00 | $75.00 |
| Claude Sonnet 4 | $3.00 | $15.00 |
| Gemini 2.5 Pro | $1.25 (prompts ≤200K tokens); $2.50 (prompts >200K tokens) | $10.00 (prompts ≤200K tokens); $15.00 (prompts >200K tokens) |
| Mistral Large 2.1 | $2.00 | $6.00 |
| Grok 4 | $3.00 | $15.00 |
| Command R+ | $3.00 | $15.00 |
| Llama 4 (Scout) | $0.15 | $0.50 |
| Llama 4 (Maverick) | $0.22 | $0.85 |
| Qwen3 | $0.40 | $0.80 |

Note that token costs frequently change as developers update the models.
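To see how these per-token prices translate into a budget, here’s a small estimator sketch in Python. The rates are copied from the table above and are assumptions that will go stale; it ignores discounts such as cached-input or batch pricing, and Llama 4 and Qwen3 prices vary by hosting provider. The workload in the example (10,000 requests a day, 2,000 input and 500 output tokens each) is hypothetical.

```python
# Prices in USD per 1M tokens (input, output), taken from the table above.
# Assumption: list prices at the time of writing; they change often.
PRICES: dict[str, tuple[float, float]] = {
    "GPT-5": (1.25, 10.00),
    "Claude Opus 4": (15.00, 75.00),
    "Claude Sonnet 4": (3.00, 15.00),
    "Mistral Large 2.1": (2.00, 6.00),
    "Grok 4": (3.00, 15.00),
    "Command R+": (3.00, 15.00),
    "Llama 4 (Scout)": (0.15, 0.50),
    "Llama 4 (Maverick)": (0.22, 0.85),
    "Qwen3": (0.40, 0.80),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of a single request."""
    if model == "Gemini 2.5 Pro":
        # Tiered pricing: the prompt (input) length decides which rates apply.
        if input_tokens <= 200_000:
            in_rate, out_rate = 1.25, 10.00
        else:
            in_rate, out_rate = 2.50, 15.00
    else:
        in_rate, out_rate = PRICES[model]
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


# Hypothetical workload: 10,000 requests per day, 2,000 input + 500 output tokens each.
for name in ["GPT-5", "Claude Sonnet 4", "Gemini 2.5 Pro", "Llama 4 (Scout)"]:
    daily = 10_000 * request_cost(name, 2_000, 500)
    print(f"{name}: ${daily:,.2f} per day")
```

At these list prices, the sample workload comes to about $75 per day for GPT-5 or Gemini 2.5 Pro, $135 for Claude Sonnet 4, and $5.50 for Llama 4 (Scout), which shows how quickly small per-token differences add up at volume.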

Context Window and Speed

An LLM’s context window, measured in tokens, determines how much text (your prompt, any attached documents, the conversation so far, and the model’s reply) it can take into account at once.

If you’re looking to analyze large datasets or lengthy documents, you’ll want to choose a model with a large context window (like Gemini 2.5).
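As a rough way to check whether a document will fit, here’s a sketch that estimates tokens from character count and compares the result with each model’s maximum context window from the comparison table. The 4-characters-per-token ratio is an assumed rule of thumb for English text, not a property of any specific model; for exact counts, use the provider’s own tokenizer. The sketch also reserves room for the reply by treating the window as a shared budget for input and output, which is a simplification.

```python
import math

# Maximum context windows from the comparison table (API limits, in tokens).
CONTEXT_WINDOWS: dict[str, int] = {
    "GPT-5": 400_000,
    "Claude Sonnet 4": 1_000_000,
    "Gemini 2.5": 1_000_000,
    "Mistral Large 2.1": 128_000,
    "Grok 4": 256_000,
    "Command R+": 128_000,
    "Llama 4 (Scout)": 10_000_000,
    "Qwen3": 128_000,
}

# Assumption: roughly 4 characters per token for English text.
# Real counts depend on each model's tokenizer and will differ.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits(model: str, text: str, reserved_output_tokens: int = 4_000) -> bool:
    """True if the prompt plus room for the reply fits in the model's window."""
    return estimate_tokens(text) + reserved_output_tokens <= CONTEXT_WINDOWS[model]


# Hypothetical 2-million-character document (about 500K estimated tokens).
document = "a" * 2_000_000
for name in CONTEXT_WINDOWS:
    print(f"{name}: {'fits' if fits(name, document) else 'too long'}")
```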

If you plan to use an LLM inside an app you’re developing and need real-time results, also consider the model’s inference latency.

Inference latency is the time a model takes to return an answer after you submit a prompt.
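Published latency figures vary with load, region, and prompt size, so it can help to measure from your own app. Here’s a minimal sketch that times a streaming response, assuming a hypothetical `fake_model_stream` function as a stand-in for whichever provider SDK you use (no real API is called). It records the time until the first chunk arrives, which matters most for chat-style interfaces, and the total generation time.

```python
import time
from typing import Callable, Iterator


def measure_latency(stream: Callable[[str], Iterator[str]], prompt: str) -> dict[str, float]:
    """Time a streaming response: time to first chunk and total time."""
    start = time.perf_counter()
    first_chunk_at = None
    for _chunk in stream(prompt):
        if first_chunk_at is None:
            first_chunk_at = time.perf_counter()  # first piece of the answer arrived
    end = time.perf_counter()
    if first_chunk_at is None:  # the stream produced nothing
        first_chunk_at = end
    return {
        "time_to_first_chunk_s": first_chunk_at - start,
        "total_s": end - start,
    }


def fake_model_stream(prompt: str) -> Iterator[str]:
    """Hypothetical stand-in for a real provider's streaming API (no network call)."""
    time.sleep(0.05)  # simulated delay before the model starts answering
    for word in "This is a simulated answer".split():
        time.sleep(0.01)  # simulated delay between chunks
        yield word


result = measure_latency(fake_model_stream, "Summarize this ticket.")
print(f"first chunk after {result['time_to_first_chunk_s']:.3f}s, done after {result['total_s']:.3f}s")
```

In practice you would run this many times with realistic prompts and compare medians rather than a single measurement.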

Model Capabilities and Benchmark Scores

If raw performance is a priority, compare models on popular benchmarks such as:

- MMLU: Tests a model’s general reasoning across academic subjects

- GSM8K: Measures a model’s math problem-solving abilities

- HumanEval: Evaluates a model’s coding skills

- HELM: Evaluates models holistically across multiple dimensions (including bias, fairness, and robustness)

Public leaderboards such as LiveBench’s LLM leaderboard compare models across categories like reasoning, math, and coding. These scores can give you a general sense of a model’s capabilities, though results on your own tasks may differ.

Get the Most Out of Large Language Models

The key to choosing the right LLM is to start from your actual needs, whether you’re building an internal tool, incorporating AI into your existing workflow, or developing AI-powered features for your software.

Curious how your website content might appear in these LLMs? Check out our guide to the best LLM monitoring tools.